In this article, I'm going to talk about how we can use Explainable AI (XAI) to help people without formal training or prior experience in Artificial Intelligence understand AI models. We are going to cover several XAI methods, from the simplest, such as constraints, to the most complex, such as GANs. After reading this article, you will know what these methods are and be able to decide which ones to apply, when, and how, in order to understand how an algorithm arrived at its final answer.

What is Explainable AI?

In the past few years, I've noticed that AI has become ubiquitous in all areas of our lives. There is hardly any social, cultural, or economic interaction between people that AI has not already touched in some shape or form. What was science fiction for me a couple of decades ago has become a reality. AI surrounds us and makes decisions for us, simplifying many aspects of our lives. That can be scary for a lot of people, and I, as a Data Scientist with over 30 years of experience, still don't have all of the answers. So, can we afford to give up that much control over our daily lives to a technology hardly anybody understands? Let me try to explain.

I personally believe that in realms such as healthcare, finance, and the law, the answer is no. We need to understand the reasons behind decisions made on our behalf by doctors, financial institutions, or judges. A few countries have recently passed laws to protect our right to have those reasons explained clearly in writing. To comply with those laws, the AI algorithms making those decisions need to explain how they arrived at them in the first place. This capability is known as Explainable AI, or XAI for short.

Why use XAI?

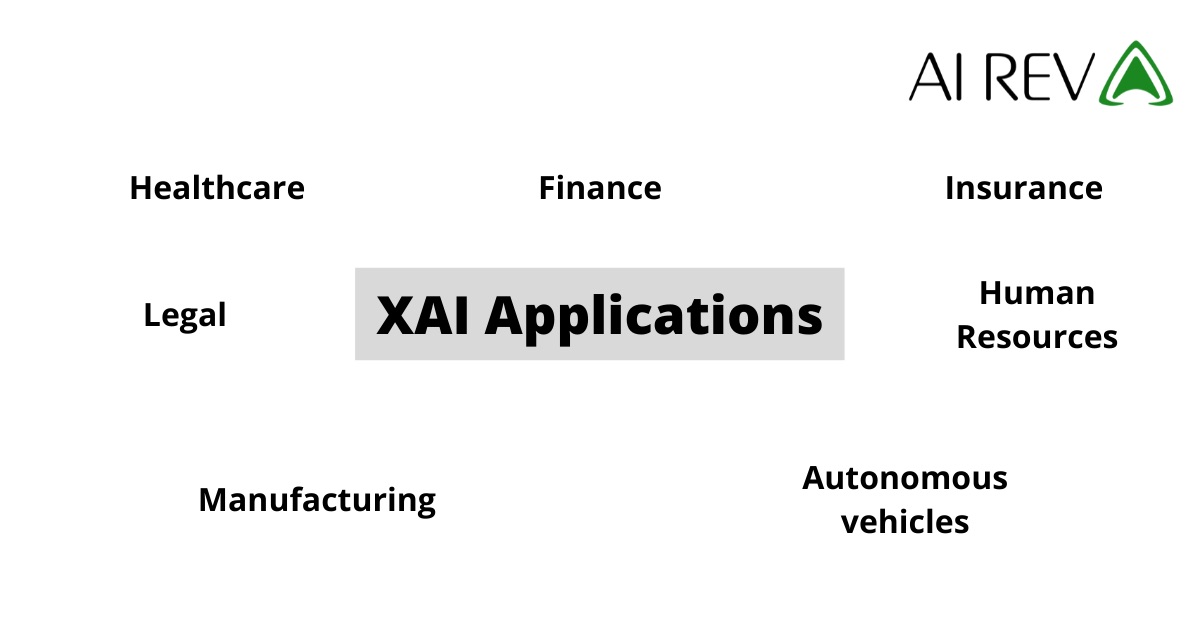

In healthcare, for example, my peers use XAI to justify and explain medical decisions based on lab tests, CAT scans, MRIs, and X-rays, as well as automated decisions made by medical equipment. The insurance industry uses XAI to justify and explain underwriting decisions on granting policies or payments. The finance industry requires XAI to explain automated decisions on bank loans, stock trades, assets, and risk management.

To comply with regulations, courts and governments use XAI to explain legal and civil decisions made by automated software. Human Resources departments use XAI to avoid hiring bias, and manufacturing uses it to justify and explain group manufacturing decisions that affect production and quality. Finally, autonomous vehicles, such as those made by Tesla, need XAI to justify driving decisions made by the car during an accident. These are, in my opinion, some of the examples where XAI is necessary.

Many of the algorithms used in AI are, by their very nature, easy to explain, such as decision trees and clustering. But other, more powerful algorithms, such as neural networks and genetic algorithms, are not so easy to explain even for someone with my experience.
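To see why a decision tree counts as easy to explain, here is a minimal sketch of a hand-written tree that returns the path it followed along with its decision. The loan scenario, the feature names `income_k` and `debt_pct`, and the thresholds are all hypothetical and chosen only for illustration.

```python
def classify_with_explanation(applicant):
    """Toy decision tree: returns (decision, list of rules that fired).

    The features and thresholds are made up for illustration only.
    """
    path = []
    if applicant["income_k"] >= 60:
        path.append("income_k >= 60")
        if applicant["debt_pct"] < 40:
            path.append("debt_pct < 40")
            return "approve", path
        path.append("debt_pct >= 40")
        return "review", path
    path.append("income_k < 60")
    return "deny", path


decision, reasons = classify_with_explanation({"income_k": 75, "debt_pct": 25})
print(decision, "because", " and ".join(reasons))
```

The explanation here is simply the list of rules that fired, which is exactly what a deep neural network cannot hand over so directly.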

Explainable AI (XAI) comprises many methods that make most AI algorithms explainable. Genetic algorithms are the exception, because they evolve over many generations into ever-fitter algorithms through random mutations of their code.

XAI-ready versions of many algorithms are readily available as libraries and packages. Consider using them to write any new applications that require XAI. However, there are some AI algorithms and models for which no XAI-ready versions are available. Let's call them "black-box" algorithms and models.

Black-box models can get really complicated, which is why they need external techniques that probe their decision boundaries. These probes offer a glimpse into the black box's inner workings and do not necessarily require any modification to the existing algorithms or models. This is where things start to get difficult for all of us.

You can think of this "probing" XAI approach as a form of "psychoanalysis" for black-box algorithms and models. And just like human psychoanalysis, XAI uses many different methods to probe various aspects of the black box's "psyche." They allow us to "interpret" the "inner workings" of the model or algorithm, and so they are known as interpretability methods. These methods follow three basic approaches: constraint, perturbation, and generative adversarial networks, or GANs.

The "constraint" approach

The "constraint" approach keeps a model's behavior within desirable boundaries by applying external constraints. Three XAI methods use this approach: Rationalization, Intelligible Model, and Monotonicity.

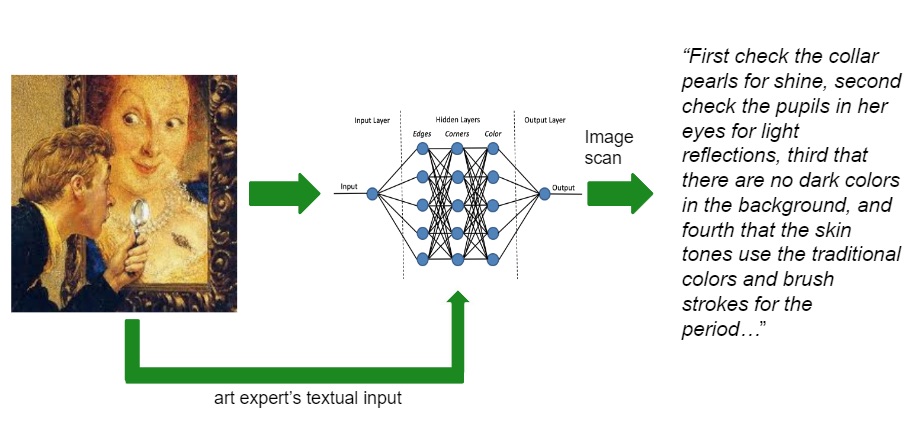

Rationalization provides a detailed, step-by-step description of how a neural net reaches its decision, similar to a human expert describing their train of thought while analyzing a painting, an X-ray, a piece of text, or a photograph. The description constrains, focuses, and continuously reduces the universe of possible alternatives until it reaches the final decision.

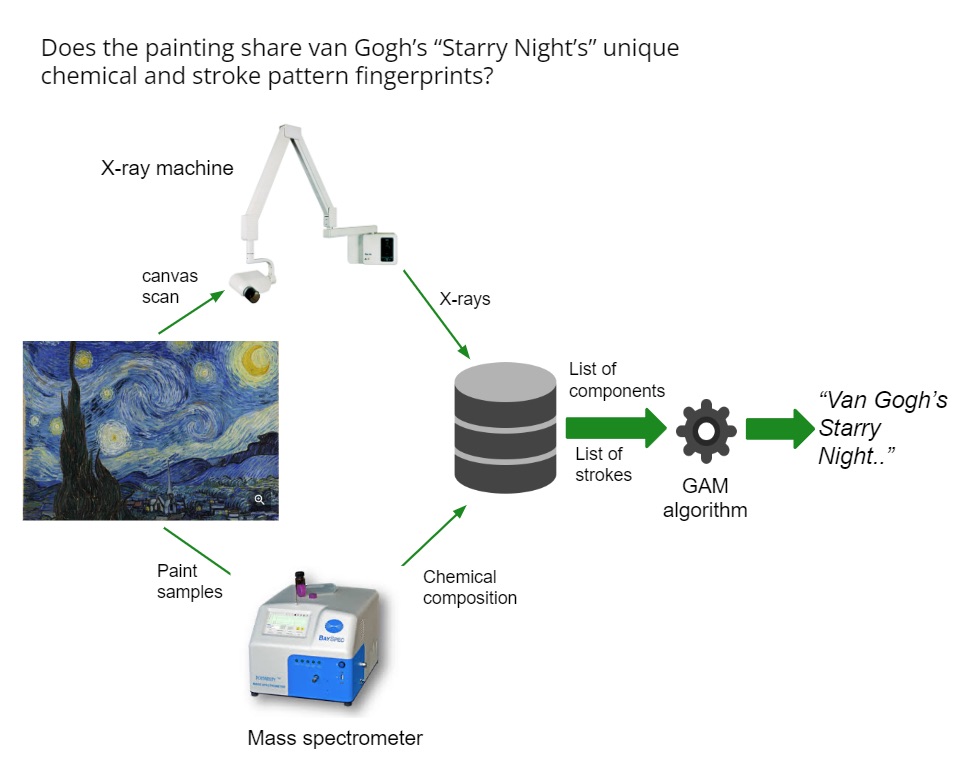

The Intelligible Model uses an entirely different approach. It reduces the problem to its simplest components, tallies them statistically, and uses the results as a unique fingerprint or signature that identifies the final decision. For example, we can identify the original painter by deconstructing a painting into the amounts and types of paint, the types of brushes, and the strokes used to paint it. Likewise, we can break a novel down into individual words and their frequencies to identify the author. Finally, we check that the results fall within the boundaries that characterize the author's "style."
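The word-frequency idea can be sketched in a few lines. This is only an illustration under my own assumptions: the cosine similarity measure, the `threshold` value, and the tiny sample texts are hypothetical stand-ins for a real stylometric pipeline.

```python
from collections import Counter
import math
import re


def fingerprint(text):
    """Relative word frequencies of a text: its statistical 'signature'."""
    words = re.findall(r"[a-z']+", text.lower())
    total = len(words)
    return {word: count / total for word, count in Counter(words).items()}


def similarity(fp_a, fp_b):
    """Cosine similarity between two fingerprints (1.0 means identical profile)."""
    dot = sum(fp_a[w] * fp_b[w] for w in set(fp_a) & set(fp_b))
    norm_a = math.sqrt(sum(v * v for v in fp_a.values()))
    norm_b = math.sqrt(sum(v * v for v in fp_b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def attribute(unknown_text, known_texts, threshold=0.5):
    """Return the best-matching author, or None if no score reaches the threshold."""
    unknown = fingerprint(unknown_text)
    scores = {author: similarity(unknown, fingerprint(text))
              for author, text in known_texts.items()}
    best = max(scores, key=scores.get)
    return (best if scores[best] >= threshold else None), scores


# Made-up sample texts, far too short for real authorship work.
known = {
    "author_a": "the sea the ship the storm and the sea again",
    "author_b": "love and heart and love and sorrow",
}
print(attribute("the storm and the ship", known))
```

The explanation comes for free: the per-author scores, and the word frequencies behind them, show exactly why one author was picked over another.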

The idea underlying the third method, Monotonicity, is a bit harder for me to explain. Strictly speaking, a monotonic model is one whose output only ever moves in one direction as a given input increases, which keeps its behavior predictable. I find it easier to think of it as something close to the concept of "generalization," so let me give you an example. Da Vinci's Mona Lisa is as iconic a painting as we can imagine. It has been imitated by other famous painters many times in the last 500 years. Yet each of those paintings, regardless of its painter's style, can still be recognized by humans as a version of the original "Mona Lisa." How can a neural network accomplish such a feat? In other words, to what extent can we alter the "Mona Lisa" while still preserving its "essence"? In music, a monotonic voice holds the same central "pitch" throughout a melody. In our example, all the paintings are identified as "Mona Lisas" despite their different styles.
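One way to make monotonicity concrete is to check, from the outside, that a model's output only ever moves in one direction as a single input grows. The sketch below does that by sweeping one feature; the `credit_score` and `comfort` models and their feature names are hypothetical toys, not anything taken from a real system.

```python
def is_monotonic_in(model, base_input, feature, values, increasing=True):
    """Sweep one feature over `values` and check the output never moves the wrong way."""
    outputs = []
    for value in sorted(values):
        candidate = dict(base_input)   # copy so the caller's input is untouched
        candidate[feature] = value
        outputs.append(model(candidate))
    pairs = list(zip(outputs, outputs[1:]))
    if increasing:
        return all(later >= earlier for earlier, later in pairs)
    return all(later <= earlier for earlier, later in pairs)


def credit_score(x):
    """Hypothetical toy model: the score rises with income and falls with debt."""
    return 300 + 4 * x["income_k"] - 2 * x["debt_pct"]


def comfort(x):
    """Hypothetical toy model that peaks at 21 degrees, so it is not monotonic."""
    return -(x["temp_c"] - 21) ** 2


applicant = {"income_k": 50, "debt_pct": 30}
print(is_monotonic_in(credit_score, applicant, "income_k", range(0, 101, 10)))
print(is_monotonic_in(comfort, {"temp_c": 0}, "temp_c", range(0, 41, 5)))
```

A sweep like this only tests the values you try, so it can reveal a violation but cannot prove that a model is monotonic everywhere.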

"Perturbation"

This is where our second approach, known as "perturbation," comes in. It monitors a model's outputs while probing its decision-making boundaries, which allows us to figure out how perturbations to the model's inputs affect its decisions.
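A minimal sketch of the perturbation idea, assuming the black box is just a function we can call: replace one input at a time with a neutral baseline value and record how much the output moves. The `black_box` function and the zero baseline are hypothetical choices made for this example.

```python
def perturbation_sensitivity(model, inputs, baseline=0.0):
    """For each input, measure how the output changes when that input is blanked out."""
    original = model(inputs)
    effects = []
    for i in range(len(inputs)):
        perturbed = list(inputs)      # copy, then perturb a single position
        perturbed[i] = baseline
        effects.append(original - model(perturbed))
    return effects


def black_box(x):
    """Hypothetical stand-in for a model whose internals we cannot inspect."""
    return 3.0 * x[0] + 0.0 * x[1] - 2.0 * x[2] + x[0] * x[2]


print(perturbation_sensitivity(black_box, [1.0, 5.0, 2.0]))
```

An effect of zero suggests the model ignores that input for this particular example, while large effects point to the inputs that drive the decision.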

There are two XAI methods based on "perturbation," known as "Axiomatic Attribution" and "Counterfactual Method."

Axiomatic Attribution answers the question: when a neural network starts perceiving an image or a piece of text, at what point does an object or a concept first become recognizable? In other words, what is the minimum amount of information required to "perturb" a neural net for it to recognize a version of the Mona Lisa as such?
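The name "axiomatic attribution" is most closely associated with a technique called integrated gradients, which averages the model's gradients along a straight path from a neutral baseline input to the real input. The sketch below is my own simplified version: the `toy_model`, the all-zeros baseline, the numerical gradients, and the step count are assumptions made for illustration, whereas a real implementation would use a framework's automatic differentiation.

```python
import math


def toy_model(x):
    """Hypothetical stand-in for a network: a logistic unit over three inputs."""
    z = 2.0 * x[0] - 1.0 * x[1] + 0.5 * x[2]
    return 1.0 / (1.0 + math.exp(-z))


def numerical_gradient(f, x, eps=1e-5):
    """Central-difference estimate of the gradient of f at x."""
    grad = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += eps
        down[i] -= eps
        grad.append((f(up) - f(down)) / (2 * eps))
    return grad


def integrated_gradients(f, x, baseline, steps=200):
    """Average the gradients along the baseline-to-input path, scaled by (x - baseline)."""
    totals = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps     # midpoint rule for the path integral
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i, g in enumerate(numerical_gradient(f, point)):
            totals[i] += g
    return [(xi - b) * total / steps for xi, b, total in zip(x, baseline, totals)]


x, baseline = [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]
attributions = integrated_gradients(toy_model, x, baseline)
print(attributions)
print(sum(attributions), toy_model(x) - toy_model(baseline))
```

The two numbers on the last line should nearly match. That is the "completeness" axiom: the attributions add up to the difference between the model's output for the input and for the baseline.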

The Counterfactual Method, as the name implies, is based on the notion of "counterfactuals." Counterfactuals are any inputs that are contrary to the facts, that is, not part of reality. They are central to posing "what if" questions: if the past had been different in some way (counterfactual), what would the present be like now? If Da Vinci had painted the Mona Lisa without her mysterious smile, would it still be the Mona Lisa? To what extent can we perturb the original concept before it becomes unrecognizable to me, you, an expert, or a neural network?
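For a model that makes yes/no decisions, a counterfactual explanation answers "what is the smallest change that would have flipped the outcome?" Here is a minimal sketch that searches along a single feature. The `approve_loan` rule, its feature names, and the step sizes are hypothetical, and real counterfactual methods search over many features at once.

```python
def approve_loan(applicant):
    """Hypothetical black-box decision: approve when the score reaches 30."""
    return applicant["income_k"] - applicant["debt_pct"] >= 30


def find_counterfactual(model, instance, feature, step, max_steps=1000):
    """Find the nearest value of one feature (in multiples of `step`) that flips the decision."""
    original = model(instance)
    for n in range(1, max_steps + 1):
        for direction in (1, -1):          # try increasing first, then decreasing
            candidate = dict(instance)
            candidate[feature] = instance[feature] + direction * n * step
            if model(candidate) != original:
                return candidate
    return None                            # no flip found within the search range


applicant = {"income_k": 50, "debt_pct": 40}
print(approve_loan(applicant))
print(find_counterfactual(approve_loan, applicant, "income_k", step=1))
print(find_counterfactual(approve_loan, applicant, "debt_pct", step=5))
```

Each result reads as a plain-language explanation: the application would have been approved had the income been 70 instead of 50, or had the debt percentage been 20 instead of 40.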

GANs

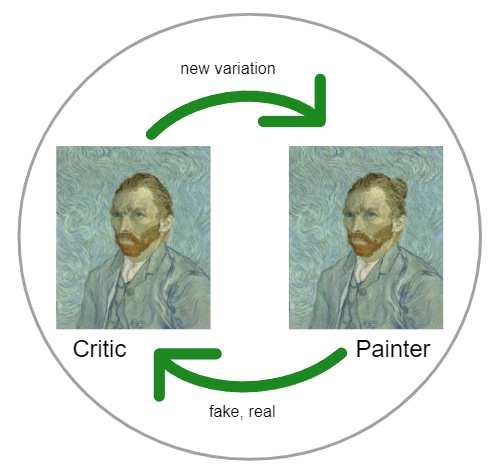

The "Generative Adversarial Networks," or GANs, approach is the most complex of all. It uses two deep neural networks, one acting as a "student" and the other as a "teacher." They continuously test each other's decisions and knowledge gaps by alternating roles, constantly improving their questions and answers, until both networks become such experts that they have nothing left to teach or learn from one another. It is just like the way some of us studied for the SATs with our friends, by quizzing each other. The method used is known as "Feature Visualization."

Both neural networks continuously keep track of what they learn from each other and how they learn it, thus providing insight into the expert's black-box way of thinking.

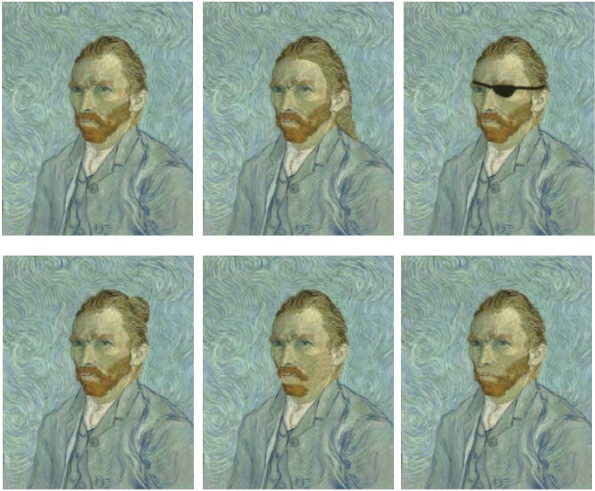

Suppose we trained a neural net to recognize every known van Gogh painting as a "genuine" van Gogh, and every other painting, including high-quality imitations, as "fake" van Goghs. Let's call this net the "Critic." The Critic is also able to explain how to paint a genuine van Gogh stroke by stroke. And suppose we partially train another neural net to both generate and recognize actual van Goghs, but not as accurately as the Critic. Let's call this second net the "Apprentice."

Next, we have the Apprentice generate a van Gogh while the Critic is watching. As the Apprentice makes mistakes, the Critic immediately notices and warns the Apprentice about them in detail. The Apprentice self-corrects and keeps tabs on its corrections. In this way, the two nets play adversarial roles. Eventually, the Apprentice becomes as good as, if not better than, the Critic at generating perfect van Goghs.

Finally, after the Critic stops correcting the Apprentice, we have them switch roles and start the cycle again. As the nets train each other, they become better and better master van Gogh painters! And we have detailed instructions generated by both nets on how to both create and identify perfect van Gogh paintings.

New Legislation

Why do we need so many different approaches and methods to do XAI? Simply because, like human psychoanalysis, one approach/method is not enough to capture all the required nuances of what is going on inside a deep learning model. If I were to describe your personality using results from one psychoanalysis test, it wouldn't be a good depiction of who you are. There are, in fact, many other emerging methods we have not even addressed.

We briefly mentioned that there are already several laws around the world that protect people's "right to an explanation." The "Equal Credit Opportunity Act" in the US, for example, compels creditors to provide applicants with clear reasons why their credit application was denied beyond simply quoting the creditors' internal policies.

The European Union currently enforces its "General Data Protection Regulation," which states that data subjects have the right to a clear explanation of the reasons behind legal or financial decisions that automated systems reach about them.

Lastly, France's "Digital Republic Act" regulates legal and administrative decisions made by public sector organizations about an individual, where the decision-making process need not be fully automated, giving the individual a right to a clear explanation of the details behind the entire process.

Summary

XAI is becoming essential in many areas. I expect that, in the future, most AI systems will be able to explain the reasoning behind their decisions. In some cases, their chain of reasoning may be so long that it is well beyond any human's capacity to follow, but it can still be summarized in a way that lets us understand how these mechanisms work. I think the industry is advancing in the right direction, but there is still a lot of work to be done.